Reproduced from medium.com

As of 2023, computers are a staple of everyday life and are used in virtually every part of the economy. They are also a general-purpose technology that can contribute to almost any industrial sector. Running software consumes energy, which contributes to climate change unless that energy comes from zero-carbon sources. An open question is whether making a software program more efficient or faster can actually help to mitigate climate change, or whether it will result in a phenomenon called ‘backfire’, in which computing (and energy) use ends up greater than it was before the investment in computing efficiency.

Computing efficiency is different from energy efficiency, because the latter assumes the same functionality in a new, more efficient product. For example, if I switch from driving a gas-guzzling Lexus to an electric Tesla sedan, that’s energy efficiency: I am simply swapping one car for another. Computing efficiency, by contrast, refers to making a piece of software complete all of its instructions faster while holding functionality constant. So the question is: would simply building faster software lead to exponential growth in demand, because the software is now easier to use, and thus to much more energy consumption, negating any climate benefits? The answer depends heavily on what the software is used for. The energy impacts of any piece of software depend on the application, the economic demand for that application, and software design decisions.

In my Ph.D. research, using a technique called input-output life cycle assessment, I found that energy efficiency does not lead to what’s called ‘backfire’ for energy-related services like electricity, transportation, or heating in the U.S., because human wants in these end uses are largely satisfied. Input-output life cycle assessment is a linear model that estimates economy-wide economic and environmental impacts from national or regional consumption or production accounts data. ‘Backfire’ is related to the concept of Jevons’ Paradox, introduced in 1865 by the English economist and logician William Stanley Jevons, who observed that as the more energy-efficient Watt steam engine was introduced, coal use in England increased exponentially, because the cost of the work done with coal was now lower. (Side note: over a longer timeframe, coal use in the U.K. has declined dramatically, from 93 million tons in 1865 to about 8.6 million metric tons in 2021, due to economic competition from other energy resources.)

Of course, as people earn more money, they can and do use more energy, especially for air travel. But since time is limited, personal direct energy consumption and spending on transportation (and the associated carbon emissions) tend to increase more slowly than income for most people, as the data below from the 2022 U.S. Consumer Expenditure Survey show. While absolute dollars spent on transportation (vehicles, expenses, and public and air transportation) increase with income, the percentage of income spent on these items decreases. The exception is air travel: when someone’s income doubles, their spending on flights (and the associated carbon emissions) often more than doubles, although flights are a relatively small part of most people’s budgets.

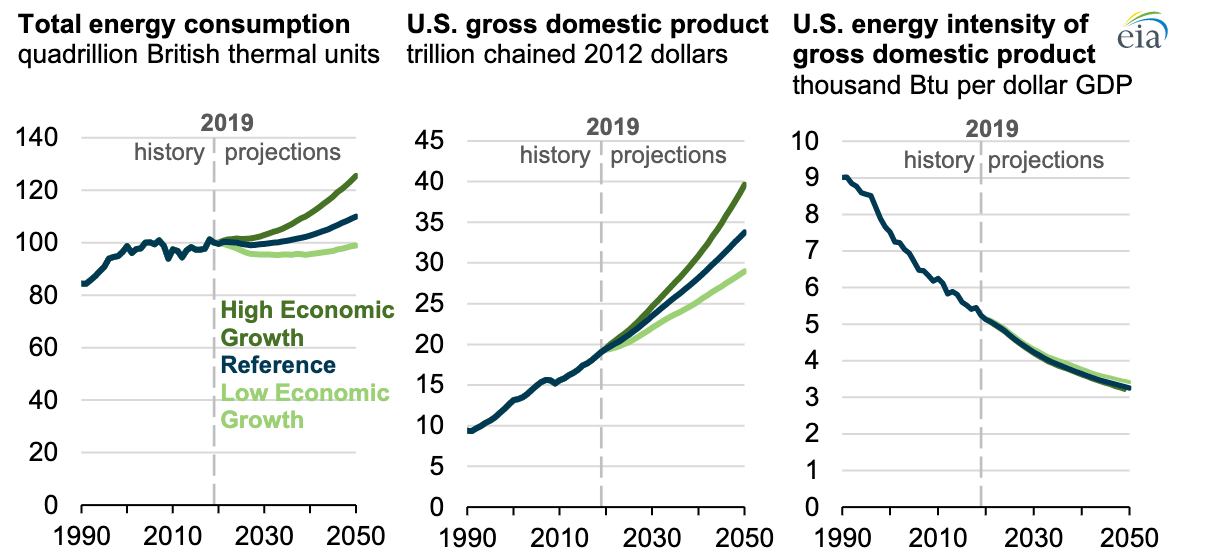
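Input-output life cycle assessment is usually written as the Leontief model: the total output `x` that all sectors must produce to deliver final demand `y` is `x = (I - A)^-1 y`, where `A` holds the direct inter-industry requirements, and economy-wide emissions are the sector emission intensities multiplied by `x`. The Python sketch below works through that linear calculation for a hypothetical two-sector economy; the sectors, coefficients, and intensities are made-up illustrative values, not data from the research described above.

```python
# Minimal sketch of an environmentally extended input-output (Leontief) model.
# All numbers are hypothetical and only illustrate the arithmetic.

def invert_2x2(m):
    """Return the inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def mat_vec(m, v):
    """Multiply a matrix (nested lists) by a vector (list)."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


def total_output(A, y):
    """Solve x = (I - A)^-1 y for a two-sector economy."""
    i_minus_a = [[1 - A[0][0], -A[0][1]],
                 [-A[1][0], 1 - A[1][1]]]
    return mat_vec(invert_2x2(i_minus_a), y)


def total_emissions(A, y, intensity):
    """Economy-wide emissions: sector intensities times total sector output."""
    x = total_output(A, y)
    return sum(f * x_i for f, x_i in zip(intensity, x))


# Hypothetical sectors: [energy, services]
# A[i][j] = dollars of sector i's output needed per dollar of sector j's output
A = [[0.2, 0.1],
     [0.3, 0.2]]
intensity = [2.0, 0.1]  # hypothetical kg CO2e per dollar of output
y = [100.0, 500.0]      # hypothetical final demand, in dollars

x = total_output(A, y)
print("total output by sector:", [round(v, 2) for v in x])
print("total emissions (kg CO2e):", round(total_emissions(A, y, intensity), 2))
```

Because the model is linear, doubling final demand doubles both total output and emissions. Real studies use published input-output tables with hundreds of sectors and a general matrix solver rather than a hand-written 2x2 inverse.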

To understand the energy implications of computing efficiency, compare the total emissions of using a new software service with those of a brick-and-mortar alternative providing a similar service, if one exists. Almost any software application has a carbon impact relative to some brick-and-mortar alternative: for example, playing a video game or watching a movie at home replaces going to an arcade or a movie theater, which would likely have required a building, utilities, and transit at a minimum. Researchers have answered this question more broadly and indirectly by showing that the total energy consumption of communications networks, personal computers, and data centers was about 4.7% of worldwide energy consumption. At a macroeconomic level, overall energy intensity (energy consumption per unit of GDP) is also steadily decreasing in the U.S., as seen in Figure 1, although total U.S. energy consumption is still expected to rise at a rate that depends on economic growth and the pace of energy efficiency investments.

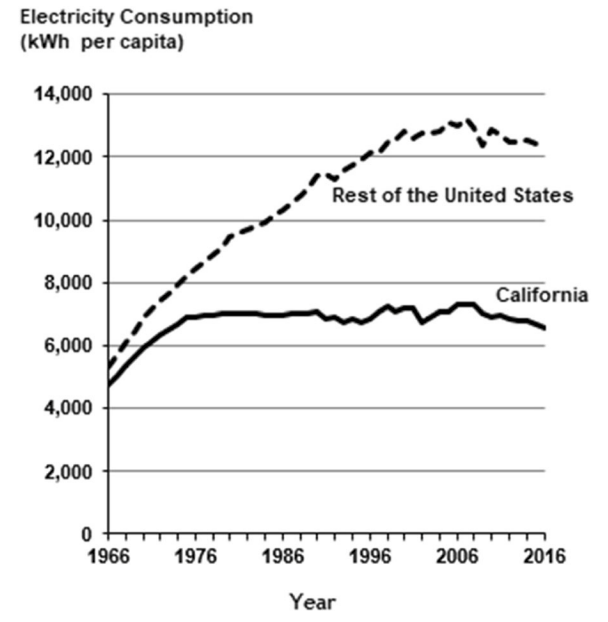
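Falling energy intensity and rising total energy consumption are compatible because total energy use is energy intensity multiplied by GDP. Writing $I_t$ for energy intensity in year $t$, $g$ for the annual GDP growth rate, and $d$ for the annual rate of decline in intensity (symbols introduced here for illustration):

$$
E_t = I_t \cdot \mathrm{GDP}_t,
\qquad
\frac{E_{t+1}}{E_t} = \frac{I_{t+1}}{I_t} \cdot \frac{\mathrm{GDP}_{t+1}}{\mathrm{GDP}_t} = (1 - d)(1 + g)
$$

Total energy consumption therefore rises whenever $(1 + g)(1 - d) > 1$, roughly when GDP growth outpaces the decline in intensity. With hypothetical values of 2% GDP growth and a 1.5% annual decline in intensity, $(1.02)(0.985) = 1.0047$, so energy use would still grow by about 0.5% per year.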

A similar pattern holds across countries: national GDP is generally growing faster than national energy consumption. The energy intensity of economic activity in most countries is declining as business services overtake raw materials extraction as a share of productive activity. This is especially true in California, where per capita residential electricity use has been relatively flat for nearly five decades (see Figure 2, the so-called “Rosenfeld curve”), thanks to the state’s world-class investments in building energy standards as well as its temperate climate, industrial structure, demographics, and other factors. We should expect residential electricity use in California to increase as more people switch to electric vehicles and to electric heat pumps for heating and cooling their homes, but these are examples of fuel switching, not a rebound in energy service demand caused by energy efficiency.

A more focused answer to whether computing efficiency saves energy depends on factors specific to a software application and the hardware it runs on. Some software applications, like those supporting taxi services (self-driving or not), have the potential to reduce overall car ownership and increase the utilization of every fleet vehicle. Taxi services can have environmental benefits from less individual car use and less need for paved parking in communities, as well as economic benefits from the higher utilization of a fleet vehicle compared with a personal car that is mostly idle. However, taxi services, whether fully automated or not, involve more driving with no passenger on board, traveling between customers, than a personal vehicle does. Quantifying the carbon emissions impacts of self-driving taxi software requires a full process life cycle assessment, which models and compares the cradle-to-grave carbon emissions (including production, manufacturing, use, and disposal) of the two options, personal car ownership and taxi services, for a common unit of analysis, e.g., per passenger-mile. Researchers at the Australian National University in Canberra have done such a process life cycle assessment and found that, once typical ‘in-transit with no passenger’ times are taken into account, U.S. and European taxi services have higher per-passenger-km emissions than personal car ownership or carpooling (see Figure 1). This means the main benefits of electric robotaxis are cost, convenience, safety, and avoiding the need for parking spots, while they impose social costs such as increased traffic and, as long as fossil fuels are still used to generate electricity, environmental pollution.
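The effect of ‘in-transit with no passenger’ driving can be seen in a simple ratio: emissions per passenger-km equal the vehicle’s emissions over all kilometers driven, occupied and empty, divided by the passenger-km delivered. The Python sketch below assumes a constant emission factor per vehicle-km and uses hypothetical distances and occupancies; it is not the method or data of the ANU study, and it omits the production and disposal stages that a full life cycle assessment includes.

```python
def emissions_per_passenger_km(g_per_vehicle_km: float, occupied_km: float,
                               empty_km: float, avg_passengers: float) -> float:
    """Grams CO2e per passenger-km, counting empty (deadhead) kilometers.

    Assumes the same emission factor per vehicle-km whether or not
    passengers are on board (a simplification).
    """
    if occupied_km <= 0 or avg_passengers <= 0:
        raise ValueError("occupied distance and occupancy must be positive")
    vehicle_emissions = g_per_vehicle_km * (occupied_km + empty_km)
    passenger_km = occupied_km * avg_passengers
    return vehicle_emissions / passenger_km


FACTOR = 200.0  # hypothetical g CO2e per vehicle-km, same car in every case

# Hypothetical 10 km trip with different occupancy and empty-driving profiles
personal = emissions_per_passenger_km(FACTOR, occupied_km=10, empty_km=0, avg_passengers=1)
carpool = emissions_per_passenger_km(FACTOR, occupied_km=10, empty_km=0, avg_passengers=3)
taxi = emissions_per_passenger_km(FACTOR, occupied_km=10, empty_km=5, avg_passengers=1)

for name, value in [("personal car", personal), ("carpool", carpool), ("taxi", taxi)]:
    print(f"{name}: {value:.1f} g CO2e per passenger-km")
```

Under these assumptions, 5 empty km for every 10 occupied km raises per-passenger-km emissions by 50% relative to the same car driven privately, while sharing the car among three passengers cuts them by two-thirds.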

The energy implications of software also depend on overall demand for that application. For example, online shopping replaces individual vehicle trips to a brick-and-mortar store with the additional logistics required to move goods from warehouses through post offices and over the last mile to the customer’s home. Researchers at Carnegie Mellon University have found that online retail, including last-mile delivery to the customer’s home, results in 30% of the primary energy consumption and carbon emissions of traditional brick-and-mortar retail, by avoiding customer trips to the store in conventional internal combustion engine vehicles. (As electric vehicles powered by 100% renewable energy become more widespread, the benefits of online retail will come primarily from convenience and product variety.) However, if online shopping lowers costs relative to brick-and-mortar retail, overall demand for goods and services, and the associated energy consumption, could increase.
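Whether lower costs could erase online retail’s energy advantage is a break-even question: total energy is energy per purchase multiplied by the number of purchases. The Python sketch below is a deliberate simplification that reuses the 30% figure as the energy per purchase relative to a store trip and ignores the energy needed to produce any additional goods; the function names are hypothetical.

```python
import math


def energy_ratio(energy_share: float, demand_multiplier: float) -> float:
    """New total energy divided by old total energy.

    energy_share: energy per unit of service after the change, as a fraction
        of the old value (0.3 means 30% of the brick-and-mortar energy).
    demand_multiplier: new demand divided by old demand (2.0 means doubled).
    """
    if energy_share <= 0 or demand_multiplier < 0:
        raise ValueError("energy_share must be positive and demand non-negative")
    return energy_share * demand_multiplier


def break_even_multiplier(energy_share: float) -> float:
    """Demand growth at which the efficiency savings are fully cancelled."""
    return 1 / energy_share


def classify(energy_share: float, demand_multiplier: float) -> str:
    """Label the outcome as net savings, full rebound, or backfire."""
    ratio = energy_ratio(energy_share, demand_multiplier)
    if math.isclose(ratio, 1.0):
        return "full rebound"
    return "backfire" if ratio > 1 else "net savings"


share = 0.3  # online retail energy per purchase relative to a store trip
print(f"break-even demand growth: {break_even_multiplier(share):.2f}x")
for multiplier in (2.0, 1 / share, 4.0):
    print(f"{multiplier:.2f}x demand -> {classify(share, multiplier)}")
```

Under this simplification, demand for online purchases would have to grow more than about 3.3-fold before total retail-related energy exceeded the brick-and-mortar baseline. The same arithmetic shows that halving energy per use backfires only if demand more than doubles.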

In other applications, such as photo-sharing or photo-hosting apps, it is debatable whether the application has any impact on energy use or simply feeds society’s seemingly unlimited demand for self-expression and culture. The low cost and ubiquity of cameras at our fingertips have also led to exponential growth in high-fidelity but low-compression image data containing information that might once have been a note in a journal (e.g., desktop and phone screenshots). On the other hand, the digitization of business processes, ranging from the Microsoft Office suite to digital tax services, online bookstores, and banking, replaces countless reams of paper and demand for postal services. One can argue that this will lead to a ‘backfire effect’ in the demand for online business content. However, since human time and attention are limited, one hopes that economic competition will temper this effect and that businesses will naturally scale their production of electronic documents and media to the human demand for viewing them.

Some high-level software use cases that researchers have identified as potentially helping to mitigate or adapt to climate change include:

- electricity supply and demand forecasting

- improving scheduling and flexible demand

- accelerating material science

- modeling transport demand

- shared mobility

- freight routing and consolidation

- modeling building energy demand

- smart buildings

Software Energy Use

The energy impact of computing efficiency also depends on the design of the software, the hardware it runs on, and consumers’ usage patterns. Software energy consumption is especially important for mobile applications, where excessive battery drain results in a negative user experience and could lead users to remove an application to conserve battery life. If the energy consumption per use of a piece of software is reduced by 50% and demand more than doubles, total energy consumption will increase. Typically, consumers are not directly aware of, or responding to, the energy consumption of software. Instead, it is design, functionality, ease of use, and performance that drive adoption of a new software service. For most software, the speed a user experiences can be increased either by parallelizing code and throwing more networked CPUs at the problem with modern cloud computing (for the back-end web server and computations, not the client-side user interface) or through genuine design optimization with a more efficient (i.e., faster) algorithm. Since the first approach adds hardware while the second reduces the work done, it is possible to separate the demand for software services, which responds to speed, from the energy consumption of the software. Tips to reduce the energy consumption of software include:

1. Virtualize - Virtualize all components of the system to allow sharing of the hardware infrastructure.

2. Measure - Put measurement infrastructure in place to determine energy KPIs in operation.

3. Refresh hardware - Replace older hardware with new hardware that offers higher capacity at a lower consumption rate.

4. Reconsider availability - Consider tuning down availability requirements that lead to under-utilization.

5. Optimize performance - Optimize the system for performance to reduce capacity demands at peak workloads.

6. Use energy settings - Use energy-efficient settings offered by hardware and virtualization layer.

7. Experiment - Dare to experiment with alternative designs and configurations.

8. Limit over-dimensioning - Dimension the system to actual current needs, not to hypothetical future needs.

9. Deactivate environments - Activate test and fail-over environments only on demand, not continuously.

10. Match workload - Know your workload and dynamically scale the system to match it.

Source: Network World, 2014
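Tips 8 and 10 above (dimension the system to actual needs and dynamically scale it to match the workload) amount to provisioning capacity from measured demand rather than a fixed worst case. The Python sketch below assumes a hypothetical service in which each instance serves a fixed number of requests per second and capacity is kept at a 70% utilization target; the load profile and capacity are invented for illustration, and real cloud autoscalers add smoothing, cooldown periods, and other safeguards that it omits.

```python
import math


def instances_needed(requests_per_s: float, capacity_per_instance: float,
                     target_utilization: float = 0.7, min_instances: int = 1) -> int:
    """Smallest instance count that keeps utilization at or below the target."""
    if capacity_per_instance <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("invalid capacity or utilization target")
    needed = math.ceil(requests_per_s / (capacity_per_instance * target_utilization))
    return max(min_instances, needed)


# Hypothetical hourly load profile (requests per second) over one day
hourly_load = [50, 40, 30, 30, 40, 80, 200, 400, 600, 650, 700, 720,
               700, 680, 650, 600, 550, 500, 400, 300, 200, 150, 100, 70]
capacity = 100.0  # hypothetical requests/s that one instance can serve

# Static sizing: provision for the daily peak in every hour
peak = instances_needed(max(hourly_load), capacity)
static_instance_hours = peak * len(hourly_load)

# Dynamic sizing: re-provision each hour to match the observed load
dynamic_instance_hours = sum(instances_needed(load, capacity) for load in hourly_load)

print(f"static: {static_instance_hours} instance-hours, "
      f"dynamic: {dynamic_instance_hours} instance-hours")
```

Here matching capacity to the hourly load uses 131 instance-hours instead of 264. Because idle servers still draw a substantial fraction of their peak power, fewer instance-hours generally means lower energy use, although the exact savings depend on the hardware.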

In addition, simple redesigns of commonly used software could help reduce energy consumption and processing requirements, e.g., by closing apps or browser tabs by default when they are not in use. With the release of new AI-based tools like ChatGPT and GPT-X, there is also an opportunity to displace the 0.3 Wh of energy used per web search, if these tools require less energy.
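As a sketch of the ‘close by default when not in use’ idea, the Python snippet below applies an idle timeout to a list of open tabs. The `Tab` class, the `close_idle_tabs` function, and the 30-minute default are hypothetical; real browsers and mobile operating systems have their own mechanisms for discarding inactive tabs and suspending background apps.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Tab:
    """Hypothetical stand-in for an open app or browser tab."""
    title: str
    last_active_s: float  # time of the last user interaction, in seconds


def close_idle_tabs(tabs: list[Tab], now_s: float,
                    idle_timeout_s: float = 30 * 60) -> tuple[list[Tab], list[Tab]]:
    """Split tabs into (kept, closed) under a close-when-idle default policy."""
    kept, closed = [], []
    for tab in tabs:
        if now_s - tab.last_active_s >= idle_timeout_s:
            closed.append(tab)  # idle past the timeout: close it by default
        else:
            kept.append(tab)
    return kept, closed


now = 10_000.0
tabs = [
    Tab("email", now - 60),              # used a minute ago
    Tab("old article", now - 3 * 3600),  # untouched for three hours
    Tab("docs", now - 20 * 60),          # used 20 minutes ago
]
kept, closed = close_idle_tabs(tabs, now)
print("kept:", [t.title for t in kept])
print("closed:", [t.title for t in closed])
```

A shorter timeout closes more tabs and saves more resources at the cost of occasionally reloading a page the user still wanted.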

In summary, computing efficiency may lead to a Jevons’ Paradox by design: faster, better-performing code that improves the user experience may lead to exponential growth in demand for that software service, especially for a new product with untapped demand. However, if that software is designed to be energy-efficient and supports an application that replaces an inefficient brick-and-mortar or transportation-heavy alternative, that is still good for the environment and for addressing climate change.